Robots.txt: do you really know what it is for and how to use it correctly?

In this article, we will explore in detail what it is and how it works along with practical examples and tips for maximizing your site’s visibility on search engines.

What is the robots.txt file?

The robots.txt file is a document in text format (.txt) that allows you to specify to search engine crawlers which pages of your site should be crawled.

With the robots.txt file you can tell user agents which resources can be scanned and which should be ignored.

What is a user agent?

A “User-agent” is software that represents the user in a request to the server. In other words, it is a program that communicates with a website or application on your behalf, sending requests and receiving responses.

Examples of User-agents include web browsers such as Chrome, Firefox and Safari, as well as search engine crawlers such as Googlebot.
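As a minimal sketch of how a program identifies itself, the snippet below builds (without sending) an HTTP request that carries a User-Agent header, using Python's standard library. The crawler name ExampleCrawler/1.0 and the example.com URL are hypothetical placeholders, not taken from this article.

```python
from urllib.request import Request


def build_request(url, user_agent):
    """Create an HTTP request object that announces the given User-Agent."""
    return Request(url, headers={"User-Agent": user_agent})


# Hypothetical crawler name and URL, used only for illustration.
request = build_request("https://www.example.com/", "ExampleCrawler/1.0")

# urllib stores header names capitalized as "User-agent".
print(request.get_header("User-agent"))
```

The server, and the rules in robots.txt, refer to a crawler by this name.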

Robots.txt: how does it work?

Now that you are clear about the main purpose of the robots.txt file, let’s look at some limitations in using this precious resource.

As reported in the official Google documentation, it is necessary to take into account some fundamental aspects:

- First, not all search engines support robots.txt file rules. The instructions contained in the file cannot enforce the behavior of a crawler; they only ask the crawler to respect them. This means that some web crawlers may not follow the instructions in the file.

- There may be differences in the interpretation of the file syntax depending on the crawler. It is therefore important to know the most appropriate syntax to use for different web crawlers to avoid confusion.

- Even if a page is blocked by robots.txt, it may still be indexed if other sites include links to that page.

- The file name is case sensitive: the file must be called “robots.txt” (not Robots.txt, robots.TXT or otherwise).

Other useful information:

- The robots.txt file must be placed in the top-level directory of a website, in the root of the web server (example.com/robots.txt).

- We recommend that you specify the location of any sitemaps for your domain at the bottom of the robots.txt file.

- Each subdomain must have its own robots.txt file.
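Because the file always lives at the root of each host, its address can be derived from any page URL. The following is a small sketch of that rule; the function name robots_txt_url and the example.com hosts are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=1"))
print(robots_txt_url("https://shop.example.com/cart"))
```

The two calls return different addresses because www.example.com and shop.example.com are separate hosts, each with its own file.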

What is the syntax of robots.txt?

The syntax of robots.txt consists of 5 terms:

- User-agent: specifies the search engine crawler to which the directives in robots.txt apply. If the “*” symbol is specified as the User-agent, the directives apply to all crawlers.

- Sitemap (optional): Indicates the location of the sitemap.xml to search engines.

NB: the pages intended for scanning and indexing (appearance in the search engine index) are inserted into the sitemap.

Furthermore, the sitemap URL must be written in full (as an absolute URL) and not as a relative path (e.g. Sitemap).

- Allow: Tells crawlers which pages can be crawled.

- Disallow: Tells crawlers which pages should not be crawled.

- Crawl-delay: indicates how many seconds a crawler should wait between one request and the next.

The crawl-delay directive is not supported by Googlebot, which ignores it.

Googlebot uses its own methods to limit its request rate and avoid overloading websites, so the directive is not needed for Google. Other crawlers, such as Bingbot, may still honor it.
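To see the five directives together, here is a small sketch that parses a sample file with Python's standard urllib.robotparser module (site_maps() requires Python 3.8 or later). The file content, the paths, the crawler name ExampleBot and the example.com URLs are all assumptions made for illustration. Note that this parser is only one interpretation of the rules, and individual search engines may behave differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content using all five directives.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press/
Disallow: /private/
Crawl-delay: 10

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
print(parser.can_fetch("ExampleBot", base + "/private/report.html"))     # False
print(parser.can_fetch("ExampleBot", base + "/private/press/kit.html"))  # True
print(parser.crawl_delay("ExampleBot"))                                   # 10
print(parser.site_maps())
```

The Sitemap line is written as an absolute URL and sits outside the User-agent group, since it applies to the whole file.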

Other characters used in the robots.txt syntax:

- * is a wildcard character representing any sequence of characters.

- $ matches the end of the URL.

NB: For each line, there must be only one disallow/allow command.
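One way to reason about these two characters is to translate a rule into a regular expression. The sketch below does this under simple assumptions: a rule matches from the start of the URL path, "*" stands for any sequence of characters, and a trailing "$" anchors the end. The function name rule_matches and the sample paths are hypothetical, and real crawlers may differ in edge cases.

```python
import re


def rule_matches(rule, path):
    """Return True if a robots.txt rule pattern matches the given URL path."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape literal text and turn every "*" into "any sequence of characters".
    regex = ".*".join(re.escape(part) for part in rule.split("*"))
    if anchored:
        # "$" means the pattern must cover the path up to its very end.
        return re.fullmatch(regex, path) is not None
    # Without "$", the rule only needs to match the beginning of the path.
    return re.match(regex, path) is not None


print(rule_matches("/*.pdf$", "/docs/guide.pdf"))             # True
print(rule_matches("/*.pdf$", "/docs/guide.pdf?download=1"))  # False
print(rule_matches("/private*", "/private-area/page.html"))   # True
```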

Some examples of using robots.txt

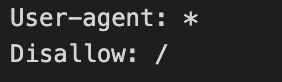

Example 1: Block crawling of an entire site

In this example, no User-agent is allowed to crawl the site.

Example 2: Allow the entire site to be crawled

In this example, all User-agents are allowed to crawl the site.
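Examples 1 and 2 differ only in the value of the Disallow line. The files below are the conventional way to write these two cases (an assumption, since the article's images are not reproduced as text), checked here with Python's urllib.robotparser. The crawler name ExampleBot and the URL are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Example 1: "/" matches every path, so the whole site is blocked.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# Example 2: an empty Disallow value blocks nothing.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""


def allowed(robots_txt, user_agent, url):
    """Parse robots_txt and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


page = "https://www.example.com/products/page.html"
print(allowed(BLOCK_ALL, "ExampleBot", page))  # False
print(allowed(ALLOW_ALL, "ExampleBot", page))  # True
```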

Example 3: Block scanning of a folder

In this example, no crawler is allowed to crawl the /cgi-bin/ folder.
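A text version of this rule might look like the sketch below, again checked with Python's urllib.robotparser. The crawler name and the URLs are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Block one folder for every crawler; everything else stays crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("ExampleBot", "https://www.example.com/cgi-bin/form.cgi"))  # False
print(parser.can_fetch("ExampleBot", "https://www.example.com/index.html"))        # True
```

The trailing slash matters: the rule covers everything inside the folder because each of those paths starts with /cgi-bin/.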

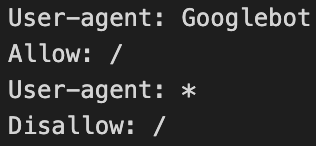

Example 4: Allow scanning only for a specific User-agent

In this example, only Googlebot is allowed to crawl the site. No other crawler may access the domain.
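This case needs two groups of rules: one for Googlebot and one for everyone else. The sketch below shows one conventional way to write it and verifies the effect with Python's urllib.robotparser. Bingbot is used only as an example of "another crawler", and the URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Googlebot gets its own group with nothing disallowed;
# the "*" group blocks every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://www.example.com/index.html"
print(parser.can_fetch("Googlebot", page))  # True
print(parser.can_fetch("Bingbot", page))    # False
```

A crawler follows the group that names it specifically and falls back to the "*" group only when no such group exists.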

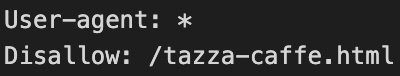

Example 5: Block crawling for a particular page

In this example, crawling of the /tazza-caffe.html page is not allowed.
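A possible text version of this rule, checked with Python's urllib.robotparser, is sketched below. The second URL and the crawler name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Block a single page for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tazza-caffe.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("ExampleBot", "https://www.example.com/tazza-caffe.html"))  # False
print(parser.can_fetch("ExampleBot", "https://www.example.com/tazza-te.html"))     # True
```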

Example 6: Block scanning for certain types of resources

In this example, the Disallow rule blocks crawling of all resources whose URL ends with “.pdf”.
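A rule of this kind is usually written as /*.pdf$. Python's standard robots.txt parser has traditionally treated rules as plain prefixes, so the sketch below instead spells out the rule as a hand-written regular expression to show what it is meant to match. The file content and the sample paths are assumptions for illustration.

```python
import re

RULE = "/*.pdf$"

# Hypothetical robots.txt content for this example.
ROBOTS_TXT = f"User-agent: *\nDisallow: {RULE}\n"

# Hand-written equivalent of RULE: "*" becomes ".*",
# the dot is taken literally, and "$" anchors the end of the URL.
PDF_RULE = re.compile(r"/.*\.pdf$")


def is_blocked(path):
    """Return True if the path falls under the PDF rule."""
    return PDF_RULE.match(path) is not None


print(ROBOTS_TXT)
print(is_blocked("/downloads/catalogo.pdf"))         # True
print(is_blocked("/downloads/catalogo.pdf?page=2"))  # False
print(is_blocked("/downloads/catalogo.html"))        # False
```

The second call shows the effect of "$": a URL that continues after ".pdf" is no longer covered by the rule.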

Robots.txt: Mistakes to avoid

Attention! There are some common mistakes you might make when using the robots.txt file.

Here are the 5 most common:

- Leave the robots file empty

- Block useful resources

- Try to prevent pages from being indexed

- Disallow a page containing a noindex tag

- Block pages containing other tags

1. Leave the robots file empty

The robots.txt file, according to Google guidelines, is only necessary if we want to prevent crawlers from crawling the site. If there are no pages or links that we want to keep hidden, there is no need to create a robots.txt file.

Additionally, sites without a robots.txt file, robots meta tag, or X-Robots-Tag HTTP headers will still be crawled and indexed by search engines.

Therefore, if there are no sections or URLs that you want to exclude from crawling, you do not need to create a robots.txt file and especially do not create an empty resource.

2. Block useful resources

The biggest risk of using the robots.txt file incorrectly is preventing the crawling of important website resources and pages that should be easily accessible to Googlebot and other crawlers for the project to be successful.

This may seem like a trivial mistake, but it often happens that potentially important URLs are accidentally blocked due to incorrect use of the robots.txt file.

3. Try to prevent pages from being indexed

The purpose of the robots.txt file is often misunderstood: it is commonly believed that adding a URL to a “disallow” rule prevents the resource from appearing in search results.

However, this is not accurate. Using robots.txt to block a page does not prevent Google from indexing it and does not remove the resource from the index or search results.

4. Disallow a page containing a noindex tag

Blocking bots from pages with a noindex meta tag makes the tag itself ineffective, since bots can’t identify it. This can lead to pages with noindex meta tags appearing in search results.

To prevent a page from appearing in search results, you need to implement the noindex meta tag and allow bots access to that page.
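The reason is easy to see from the crawler's side: the noindex instruction lives inside the page's HTML, so it can only be read after the page has been fetched. The sketch below shows, with Python's standard html.parser, roughly what reading that tag involves. The class and function names are hypothetical, and real crawlers are more elaborate.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```

If robots.txt blocks the URL, the crawler never downloads the HTML, so a check like this never runs.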

5. Block pages containing other tags

As we have already mentioned, blocking a URL prevents crawlers from reading the contents of the pages and also from interpreting the commands you set, including the important ones mentioned above.

To allow Googlebot and similar crawlers to correctly read and consider the status codes or meta tags of URLs, it is necessary to avoid blocking these resources in the robots.txt file.

What are the differences between robots.txt, X-Robots-Tag and meta robots

Here in brief are the differences between robots.txt, X-Robots-Tag and meta robots:

- The robots.txt file allows you to influence the crawler’s behavior and crawling activity when faced with certain resources.

- The X-Robots-Tag HTTP header can determine how content appears in search results (or ensure it isn’t shown). The directive is declared in the HTTP response header.

- The robots meta tag can also determine how content appears in search results (or ensure it isn’t shown). The directive is inserted into the HTML of the single page.
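Since the X-Robots-Tag travels in the response headers rather than in the HTML, it can also be applied to non-HTML resources such as PDF files. The sketch below reads the directives from a dictionary of response headers. The function name and the sample headers are assumptions, and the sketch ignores the optional form in which a directive is prefixed with a specific crawler name.

```python
def x_robots_directives(headers):
    """Return the lowercase directives from an X-Robots-Tag response header."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "x-robots-tag":
            return [part.strip().lower() for part in value.split(",") if part.strip()]
    return []


# Hypothetical response headers for a PDF file.
pdf_headers = {"Content-Type": "application/pdf", "X-Robots-Tag": "noindex, nofollow"}

print(x_robots_directives(pdf_headers))  # ['noindex', 'nofollow']
```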

How to test the robots.txt file

To test a website’s robots.txt file you can use the Google Search Console.

Google Search Console (GSC) is a free tool offered by Google that allows website owners to monitor and improve their presence on search engines.

GSC also includes a robots.txt file check feature, where you can check whether the file has been configured correctly and whether there are any problems with it.

To access and use the tool, follow these simple steps:

- Access the following link

- Select your property

- Enter the URL of the page you want to test in the box at the bottom of the page

- Select the desired User-Agent with the dropdown next to the input box

- Click the “TEST” button

If “ALLOWED” is returned, crawling is allowed for the tested page. If “BLOCKED” is returned, crawling is not allowed for the tested page.

As an alternative to Google Search Console, Merkle’s robots.txt file testing tool is available.
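For a quick local check before publishing a file, a similar ALLOWED/BLOCKED report can be sketched with Python's urllib.robotparser. This is only an approximation of what the online tools do: Python's parser has traditionally not interpreted the "*" and "$" characters inside paths, and its handling of conflicting rules may differ from Google's. The function name check_urls, the rules and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check_urls(robots_txt, user_agent, urls):
    """Return an ALLOWED or BLOCKED verdict for each URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        url: "ALLOWED" if parser.can_fetch(user_agent, url) else "BLOCKED"
        for url in urls
    }


# Hypothetical draft of a robots.txt file and the pages to verify.
DRAFT = """\
User-agent: *
Disallow: /admin/
"""

report = check_urls(
    DRAFT,
    "Googlebot",
    ["https://www.example.com/", "https://www.example.com/admin/login.html"],
)
for url, verdict in report.items():
    print(verdict, url)
```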